Although AI is clever in the same way that a magician convincing you he or she is a mind reader is clever, it is not that intelligent. In fact, I should go so far as to say it is pretty stupid.

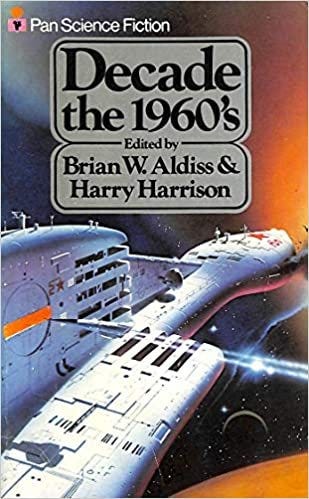

Spoiler alert: in order to discuss this story, I have had to reveal the plot and the outcome. However, I’ve provided a link to a book in which you can find the story, should you wish to read it first.

In an astonishing display of prescience, Gordon R. Dickson wrote a short story called Computers Don’t Argue in 1965. The date is significant because the story’s devastating ending is caused by an out-of-office reply, which, according to Microsoft, did not come into being until the late 1980s.

These days we have probably all experienced the frustration of dealing with a computer system. Quite often I end up digging around the web to find the phone number or email address of an actual person.

For example, I was trying to book a course using some credit, and the computerised system wouldn’t allow it. It took a senior administrator to override the computer system’s protocols and somehow fool the computer into thinking it wasn’t giving me credit! (She did it by changing the price of the course.)

Yesterday, the person at the checkout couldn’t scan an item from my basket because the barcode decided to down tools. In the end, she scanned a completely different product and simply altered the price.

You can’t argue with a computer, which is the whole point of this story.

It’s written in the form of an exchange of letters. (I love epistolary stories, and I love the fact that I finally have an excuse to use the word ‘epistolary’.) A book club customer receives an automated letter from the company’s accounts department. The story ends with the customer being executed for murder. Put like that, it sounds ludicrous. However, the writer does an excellent job both of reflecting the annoyance of dealing with a computer program that has neither flexibility nor intelligence, and of highlighting the need for programs to invite human input when the consequences of not doing so can be catastrophic. (I hope someone at the telecommunications company I use reads this.)

Since I first wrote this article, in 2022, artificial intelligence has really taken off. So would that mean that this scenario is even less likely to happen? Such is my pessimistic nature with regard to technology that I don’t think so, mainly because although AI is clever in the same way that a magician convincing you he or she is a mind reader is clever, it is not that intelligent. In fact, I should go so far as to say it is pretty stupid. I mean, you just have to consider how much effort it takes to find the right wording for a prompt that will give you exactly what you want, whereas a person would probably work it out straight away.

To take a simple example, last year I asked ChatGPT to devise an outline for a one-day course on Computing. It did a fine job, apart from the fact that it created a course that started at 8am and went all the way through to 6pm without a single break.

One of the things that Dickson highlighted, and which has not changed with the advent of AI (in fact it has, arguably, become much worse), is that you cannot argue with an algorithm, especially if you don’t know what the algorithm is or, rather, how it works. Even the people who create AI apps don’t always know how the program came up with a solution.

This is even more concerning when you consider that some people have advocated using AI for sentencing criminals. Firstly, they argue, AI is likely to be objective. (It’s a well-known fact that you’re more likely to be given a lighter sentence if it is handed down after the judge has had lunch.) Secondly, it’s going to be more consistent. I don’t know what it is like in other countries, but in England there seems to be very little rhyme or reason to sentencing. Some people have been sent to prison for saying unpleasant and provocative things on Facebook, while others have been given a suspended sentence for hitting someone.

On the first point, AI is not objective at all because it has the programmers’ prejudices baked in. In some cases, it has learnt to be prejudiced from the sort of material it’s been trained on.

On the second point, perhaps an AI judge would be more consistent — but you still wouldn’t be able to argue with it. And could it take into account every possible mitigating circumstance?

Perhaps a hybrid solution would work. For example, I sometimes ask AI to draft an assessment task. That saves me hours of work, but then I tweak it and add to it as required by the situation I’m facing. Perhaps a legal AI could work in the same way, that is, by suggesting an appropriate sentence and then leaving it to the judge to make the final decision.

Anyway, do read the original Dickson story if you can. It was published around sixty years ago, and if anything it is even more relevant today than it was then.

